- Chat with RTX runs locally on your PC without needing an internet connection.

- You can point it at your own documents, PDFs, text files, and YouTube video links, and it answers questions using that content.

- It's free, quick to set up, and keeps your data private.

February 15, 2024: Nvidia has introduced a new AI chatbot called “Chat with RTX,” designed to run directly on Windows PCs with Nvidia GeForce RTX graphics cards.

What sets this chatbot apart is that it runs locally, so it doesn’t need an internet connection to work.

Nvidia aims to offer a personalized AI experience by allowing users to feed their own documents, text files, PDFs, and even YouTube videos into the chatbot.

This information then powers the chatbot’s responses, making it a useful tool for quickly finding information or getting summaries of videos and documents.

“Chat with RTX” is built on retrieval-augmented generation (RAG), Nvidia’s TensorRT-LLM software, and RTX GPU acceleration.

This setup lets the chatbot quickly scan and index the provided files and then answer questions with context drawn from them, so its responses are fast and grounded in the user’s own content.
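To make the “index, retrieve, then answer” idea behind RAG more concrete, here is a toy sketch of the retrieval half in Python. It is not Nvidia’s implementation. It assumes simple word-count vectors compared by cosine similarity, standing in for the neural embedding models that real RAG systems usually use, and the function names (`build_index`, `retrieve`, `build_prompt`) and sample files are hypothetical. In a real system, the prompt returned by `build_prompt` would be passed to a local language model, which this sketch leaves out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(documents: dict[str, str]) -> list[tuple[str, Counter]]:
    """'Index' step: store a word-count vector for each document (toy stand-in for embeddings)."""
    return [(name, Counter(tokenize(text))) for name, text in documents.items()]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """'Retrieve' step: return the names of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


def build_prompt(documents: dict[str, str], index, query: str, k: int = 2) -> str:
    """'Augment' step: prepend the retrieved text to the question for the language model."""
    context = "\n\n".join(documents[name] for name in retrieve(index, query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical sample files standing in for a user's documents.
docs = {
    "notes.txt": "The quarterly budget meeting moved to Thursday at 3 pm.",
    "recipe.txt": "Preheat the oven to 200 C and bake the bread for 30 minutes.",
    "trip.txt": "The flight to Berlin departs Monday morning.",
}
index = build_index(docs)

print(retrieve(index, "When is the budget meeting?", k=1))  # ['notes.txt']
```

The design choice here is simplicity: ranking by word overlap keeps the example dependency-free, but it only matches exact words, which is why production systems switch to embeddings that capture meaning rather than spelling.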

The chatbot does have limitations: it doesn’t carry context from one question to the next, so follow-up questions that depend on an earlier answer often fail.

However, Nvidia has said it will keep improving “Chat with RTX” over time.

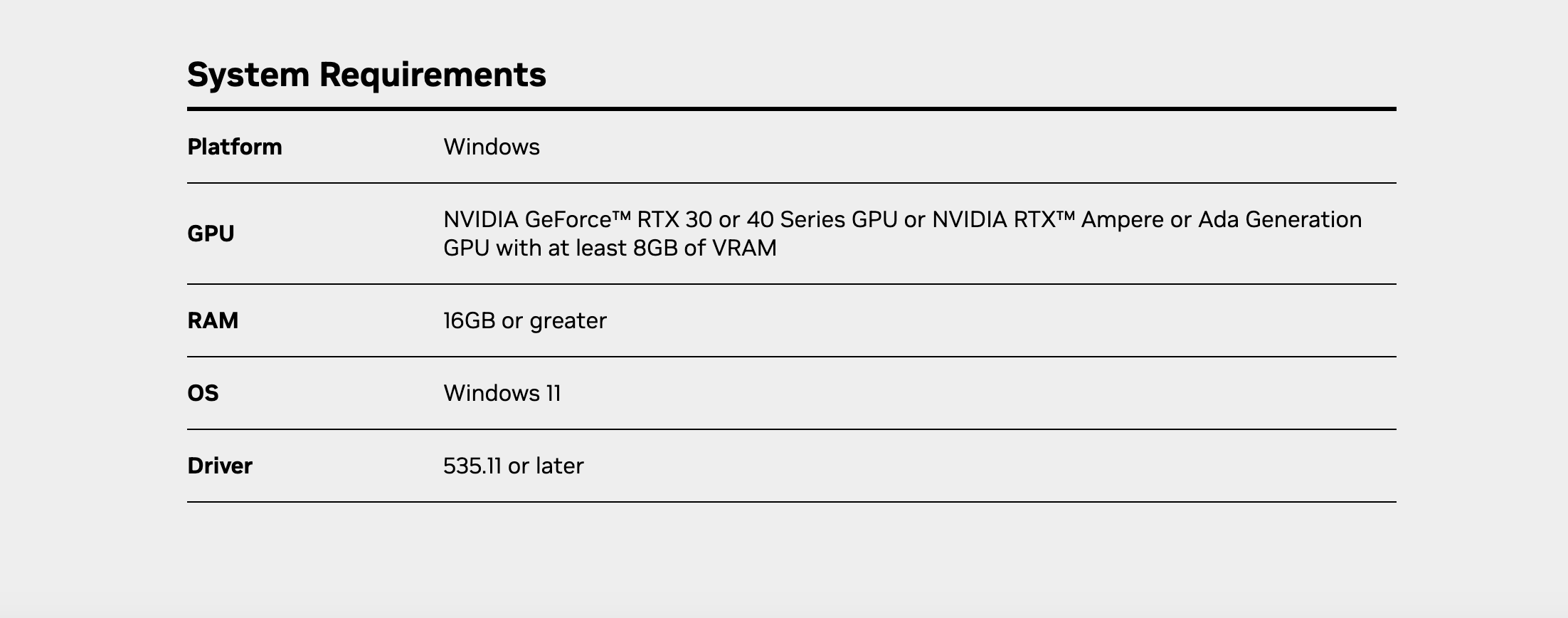

The chatbot is available as a free demo download for GeForce RTX PCs that meet its requirements: Windows 10 or 11, a GeForce RTX 30 or 40 Series GPU with at least 8GB of VRAM, and the latest Nvidia drivers.

Nvidia is also encouraging developers to explore RTX-accelerated AI applications by sponsoring a contest for generative AI apps and plugins built with TensorRT-LLM, the framework that “Chat with RTX” showcases.

With “Chat with RTX,” Nvidia is not only offering a tool for personal use but also demonstrating what local AI processing can do: respond quickly while keeping sensitive data on the user’s own device.

This initiative is a step toward making AI more accessible and customizable for individual needs while keeping user data private.